LlamaPIE: Proactive In-Ear Conversation Assistants

Tuochao Chen*, Nicholas Batchelder*, Alisa Liu*, Noah A. Smith*†, Shyamnath Gollakota*

* Paul G. Allen School of Computer Science & Engineering, University of Washington, USA

† Allen Institute for Artificial Intelligence, WA, USA

ACL ’25: The 63rd Annual Meeting of the Association for Computational Linguistics

Video Demo 1

Proactive Agent

Video Demo 2

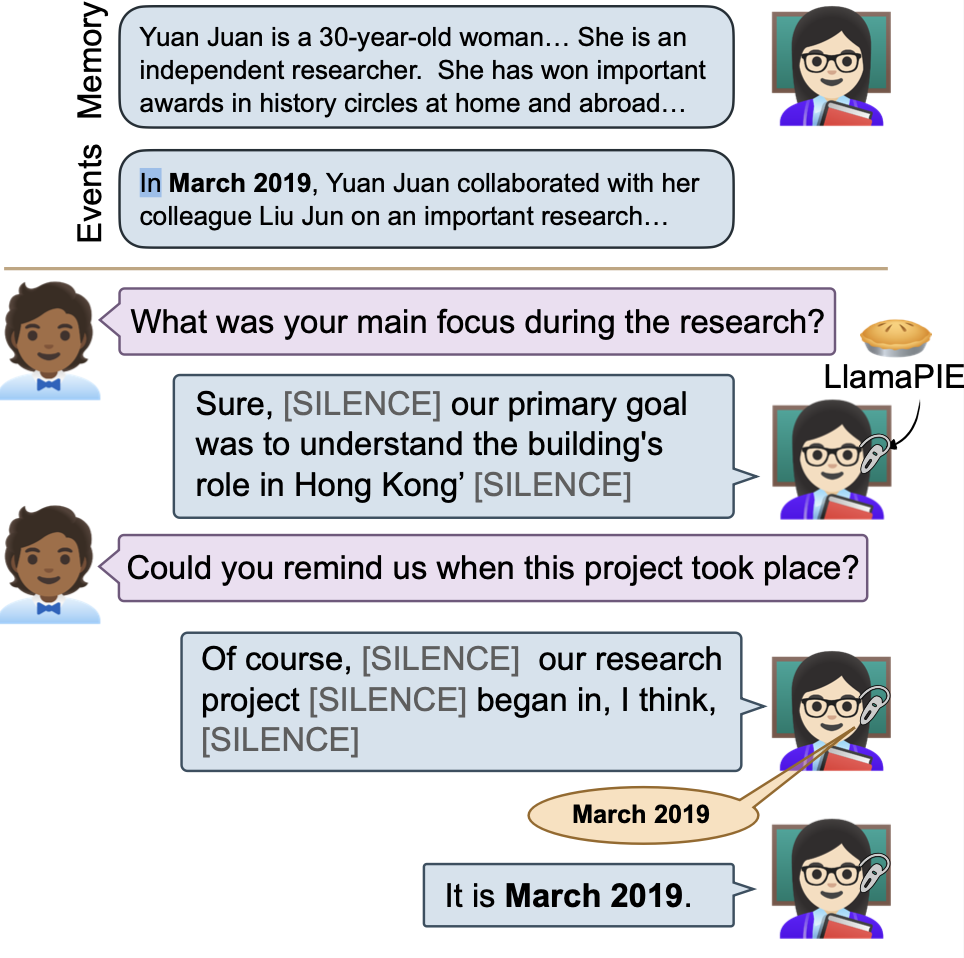

We introduce LlamaPIE, the first real-time proactive assistant designed to enhance human conversations through discreet, concise guidance delivered via hearable devices. Unlike traditional language models that require explicit user invocation, this assistant operates in the background, anticipating user needs without interrupting conversations. We address several challenges, including determining when to respond, crafting concise responses that enhance conversations, leveraging knowledge of the user for context-aware assistance, and processing in real time on device. To achieve this, we construct a semi-synthetic dialogue dataset and propose a two-model pipeline: a small model that decides when to respond and a larger model that generates the response. We evaluate our approach on real-world datasets, demonstrating its effectiveness in providing helpful, unobtrusive assistance. User studies with our assistant, implemented on Apple Silicon M2 hardware, show a strong preference for the proactive assistant over both a baseline with no assistance and a reactive AI assistant, highlighting the potential of LlamaPIE to enhance live conversations.
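The control flow of the two-model pipeline described above can be illustrated with a minimal sketch. This is not the released LlamaPIE code: the class `ProactivePipeline`, the functions `toy_gate` and `toy_generator`, and the choice to append the agent's whisper to the dialogue history are all assumptions made for illustration. Both models are replaced by plain Python callables so the example runs without any model weights.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ProactivePipeline:
    """Hypothetical two-stage loop: a cheap gate runs on every utterance,
    and the costlier generator runs only when the gate fires."""
    should_respond: Callable[[List[str]], bool]  # stand-in for the small model
    generate: Callable[[List[str]], str]         # stand-in for the larger model
    history: List[str] = field(default_factory=list)

    def on_utterance(self, speaker: str, text: str) -> Optional[str]:
        # Record the new turn, then ask the gate whether to speak at all.
        self.history.append(f"{speaker}: {text}")
        if not self.should_respond(self.history):
            return None  # stay silent; the generator is never invoked
        whisper = self.generate(self.history)
        # Assumption: the whisper is kept in the history for later turns.
        self.history.append(f"Agent: {whisper}")
        return whisper


def toy_gate(history: List[str]) -> bool:
    """Toy heuristic (not a trained model): fire when the user hesitates."""
    last = history[-1].lower()
    return last.startswith("user:") and any(
        cue in last for cue in ("um", "uh", "...")
    )


def toy_generator(history: List[str]) -> str:
    """Toy generator (not a trained model): returns a fixed short hint."""
    return "ACL 2025"


if __name__ == "__main__":
    pipeline = ProactivePipeline(should_respond=toy_gate, generate=toy_generator)
    turns = [
        ("Speaker1", "Where was the paper published?"),
        ("User", "Um, I think it was..."),
    ]
    for speaker, text in turns:
        print(speaker, "->", pipeline.on_utterance(speaker, text))
```

Splitting the decision from the generation means that silent turns, which are presumably the majority in a live conversation, cost only one call to the small model.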

[Paper] [Code] [Dataset]

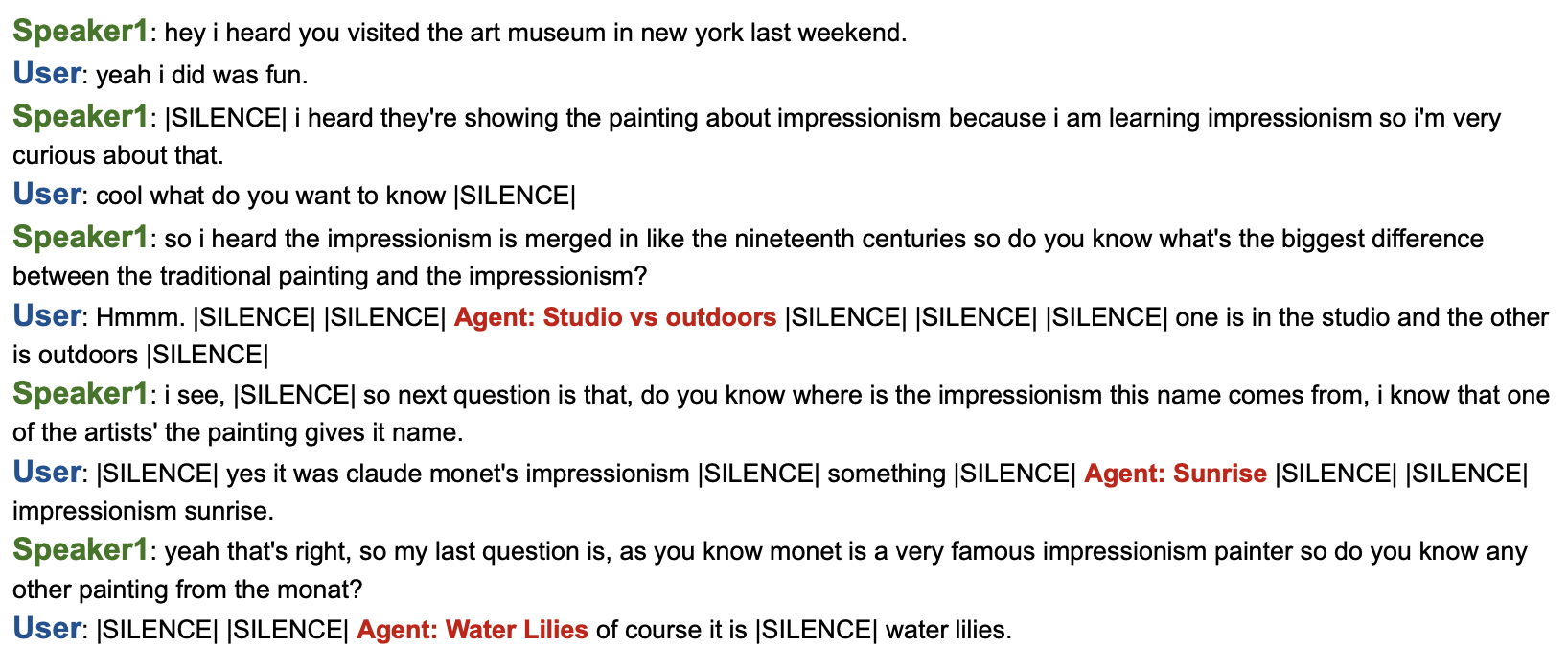

Example 1: (Agent is proactively helping User in the conversation with Speaker1)

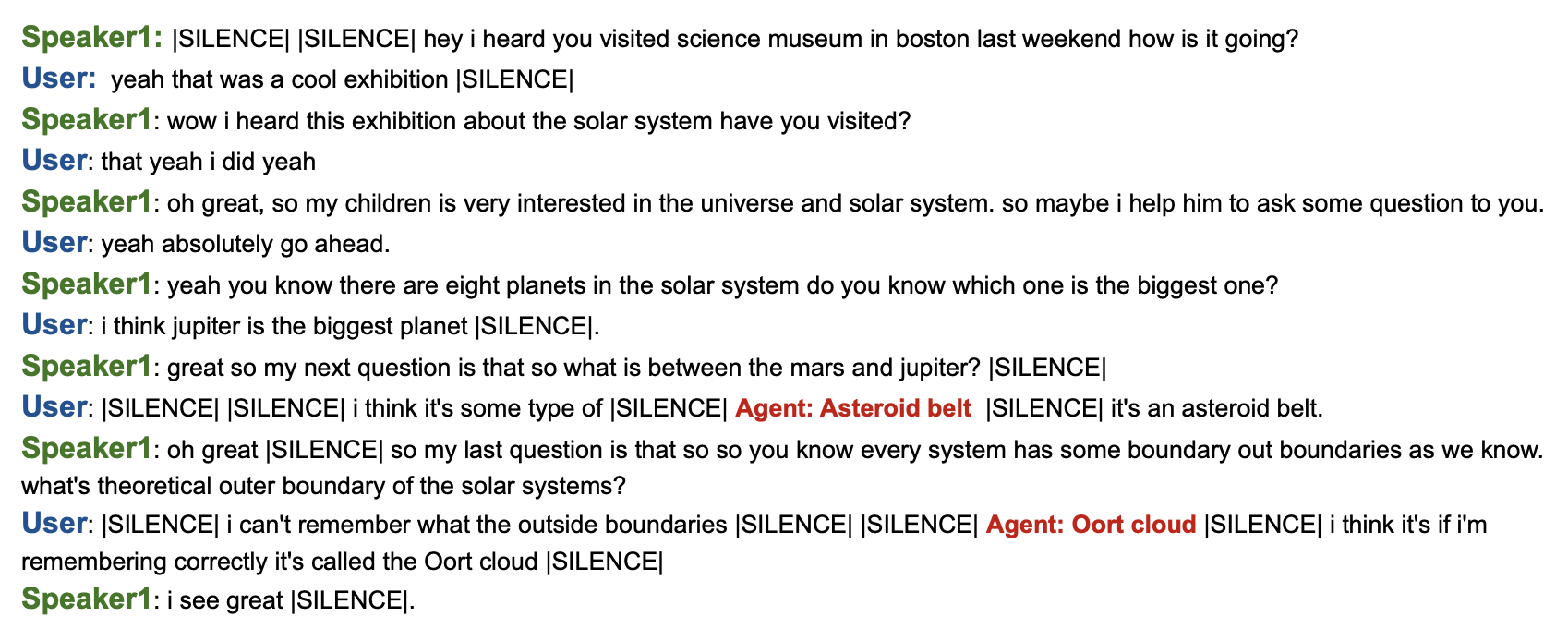

Example 2: (Agent is proactively helping User in the conversation with Speaker1)